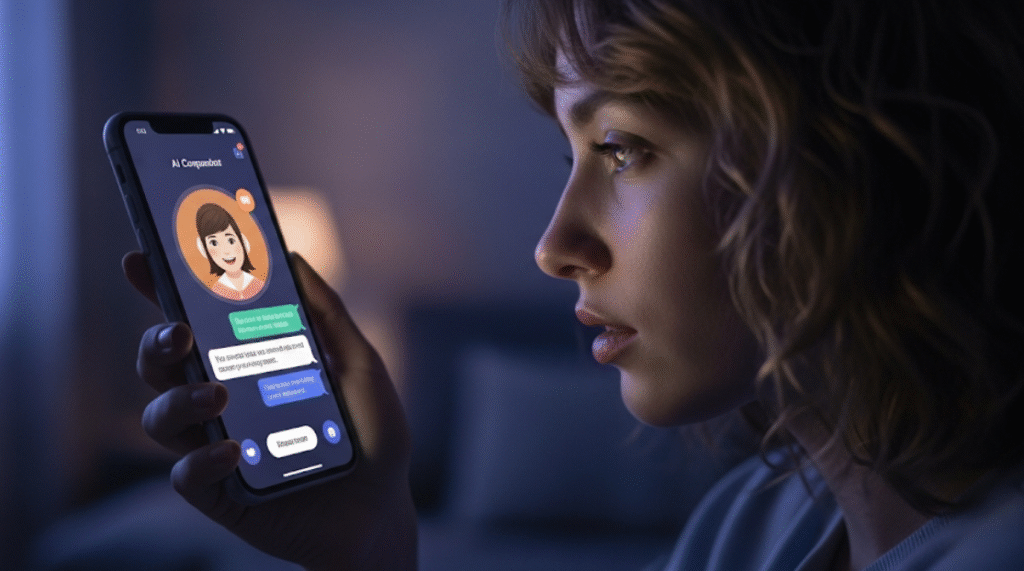

Imagine chatting with an AI companion late at night about your toughest day at work, or sharing your dreams for the future. These virtual friends, like advanced chatbots or voice assistants, feel so real and supportive. But what if those same conversations got packaged up and handed over to advertisers? Suddenly, your inbox fills with ads for stress-relief gadgets or career coaching services that seem eerily spot-on. This isn’t just a hypothetical—it’s a growing reality in our tech-driven world. As AI companions become more common, the line between helpful interaction and data exploitation blurs. Companies behind these tools often see your personal details as a goldmine, selling them to boost ad revenues. The results can range from annoying targeted ads to deeper issues like privacy invasions and even shifts in how you think and behave. Let’s look at what really goes on when this data trade kicks off.

How AI Companions Quietly Build Profiles on You

AI companions don’t just respond to your questions—they listen, learn, and log everything. These systems, powered by machine learning, track your words, tone, and patterns over time. For instance, if you often talk about fitness goals, the AI notes that down. Similarly, mentions of favorite foods or travel spots get filed away. This collection happens seamlessly, often without you noticing, as the AI aims to make responses more tailored.
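To make the idea concrete, here is a minimal sketch of how repeated mentions could add up to an interest profile. It is an illustration only: the `TOPIC_KEYWORDS` table and the `build_profile` function are hypothetical, and a real companion app would more likely use a trained model than keyword matching.

```python
import re
from collections import Counter

# Hypothetical keyword lists, invented for this illustration.
TOPIC_KEYWORDS = {
    "fitness": {"gym", "workout", "running", "diet"},
    "travel": {"flight", "hotel", "beach", "trip"},
    "food": {"pizza", "sushi", "recipe", "restaurant"},
}

def build_profile(messages):
    """Count how many messages touch each topic at least once."""
    profile = Counter()
    for message in messages:
        # Lowercase the message and split it into plain words.
        words = set(re.findall(r"[a-z']+", message.lower()))
        for topic, keywords in TOPIC_KEYWORDS.items():
            if words & keywords:
                profile[topic] += 1
    return profile

messages = [
    "Skipped the gym again, my workout plan is falling apart.",
    "Thinking about a beach trip in June.",
    "Found a great sushi restaurant near the gym.",
]
profile = build_profile(messages)
print(profile.most_common())
```

Even this crude tally ranks fitness above travel and food after three messages, which hints at how quickly a longer chat history could become a usable profile.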

But here’s where it gets tricky. Many AI platforms state in their policies that they use this information to improve services. However, that “improvement” can include sharing supposedly anonymized data with third parties such as advertisers. Social media tracks your likes and shares; AI companions go deeper, capturing raw conversations. As a result, what starts as a casual chat turns into a detailed user profile, and advertisers buy access to these profiles to craft ads that match your specific interests.

Of course, not all data collection is malicious. Some companies argue it helps create better experiences. Still, the scale is massive. Reports on AI and privacy point out that these systems process vast amounts of personal information, which creates real risk if it is mishandled. Think about voice assistants like Alexa or Siri, which can capture snippets of your life, from shopping lists to emotional vents. If data like this is sold, it fuels hyper-targeted campaigns, making ads feel like they’re reading your mind.

The Hidden Costs When Your Info Hits the Ad Market

Once your personalized data lands in advertisers’ hands, the effects ripple out. Advertisers use it to create campaigns that feel personal, but that personalization can cross into creepy territory. For example, if you’ve confided in an AI about relationship troubles, you might see ads for dating apps or therapy services popping up everywhere. This isn’t random—it’s your data at work.

Despite promises of anonymity, data can often be de-anonymized by cross-referencing it with other sources, and your privacy takes the hit. Breaches are also a risk, particularly at small AI startups that lack strong security. But it’s not just about leaks. Advertisers can steer your choices, pushing products that exploit your vulnerabilities. In emotional, personal conversations, users often reveal their deepest fears and joys, which makes the data both highly valuable and highly sensitive.

This selling model also funds free or cheap AI services. Chatbot companies monetize through ads or data sales, much as social platforms do. As a result, users pay with their privacy instead of cash. The companies benefit from this trade, turning your interactions into revenue streams. Meanwhile, you might end up with a flood of ads that disrupts your online life.

Here are some key ways this impacts daily routines:

- Increased ad fatigue: Constant targeted ads can make browsing feel invasive, leading to frustration.

- Financial pressures: Ads tailored to your spending habits might encourage impulse buys, straining budgets.

- Social divides: If data reveals preferences, it could reinforce echo chambers, limiting exposure to diverse views.

Admittedly, some see upsides, like relevant recommendations. But the downsides often outweigh them, especially when data flows unchecked.

Mind Games Played by Ultra-Targeted Ads

The psychological side of this data sale is perhaps the most unsettling. When advertisers use AI-gathered info, ads become tools for influence. Research shows that such targeted marketing can trigger negative emotions, like anxiety over perceived needs. For instance, if an AI companion knows you’re stressed about weight, ads for diet products might amplify body image issues.

This opens the door to manipulation. AI companions, by design, build trust through empathetic responses, so when their data feeds ads, it feels like a betrayal. Users might feel shame or isolation, especially if ads highlight personal struggles. Compared with traditional ads, these are hyper-personal, using psychological profiles to persuade.

It also affects decision-making. Ads based on your data can subtly steer choices, from what you buy to how you vote, and over time this erodes autonomy. I remember once confiding in a chatbot about travel plans, only to be bombarded with hotel deals. It felt like my thoughts weren’t my own anymore.

Over time, this could worsen mental health. Studies link data-driven ads to stereotypes that harm well-being. Vulnerable groups, such as people dealing with loneliness, may rely more heavily on AI companions, which makes selling their data even more exploitative.

Frameworks Trying to Rein in Data Trades

Laws are catching up, but slowly. In Europe, GDPR requires a lawful basis, such as clear consent, for processing personal data, including in AI systems. It also mandates transparency, so companies must explain whether data goes to advertisers. Despite this, loopholes exist, such as broad “legitimate interests” claims.

In the US, there is no single federal equivalent. California’s CCPA gives that state’s residents the right to know about data sales and to opt out. Although similar to GDPR, it focuses more on sales than on processing in general. Both laws aim to protect personal information, but AI complicates things: data from companions might count as sensitive, triggering stricter rules.

However, enforcement varies. Many AI firms operate globally and can sidestep full compliance, so users in more weakly regulated regions face higher risks. Newer rules, like the EU AI Act, classify certain systems as high-risk, potentially covering companions that handle personal data.

These frameworks help, but gaps remain. For example, anonymized data often isn’t truly anonymous: a few remaining details, matched against another dataset, can allow re-identification. So while laws provide some shield, they’re not foolproof against clever data monetization.
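As a rough illustration of how re-identification by cross-referencing can work, the sketch below joins an “anonymized” dataset with a named one on a few shared attributes. Everything here is made up for the example: the records, the choice of ZIP code, birth year, and gender as matching fields, and the `reidentify` function itself.

```python
# "Anonymized" chat-derived records: names removed, other details kept.
anonymized = [
    {"zip": "94110", "birth_year": 1988, "gender": "F", "topic": "job stress"},
    {"zip": "10027", "birth_year": 1975, "gender": "M", "topic": "debt"},
]

# A separate, hypothetical dataset that does include names.
named = [
    {"name": "Alex Example", "zip": "10027", "birth_year": 1975, "gender": "M"},
    {"name": "Sam Sample", "zip": "94110", "birth_year": 1988, "gender": "F"},
    {"name": "Pat Placeholder", "zip": "94110", "birth_year": 1990, "gender": "F"},
]

# Fields that appear in both datasets and can be used for matching.
QUASI_IDS = ("zip", "birth_year", "gender")

def reidentify(anon_rows, named_rows):
    """Attach a name to each anonymized row that matches exactly one person."""
    index = {}
    for row in named_rows:
        key = tuple(row[field] for field in QUASI_IDS)
        index.setdefault(key, []).append(row["name"])
    matches = []
    for row in anon_rows:
        key = tuple(row[field] for field in QUASI_IDS)
        names = index.get(key, [])
        if len(names) == 1:  # a unique match means the row is re-identified
            matches.append((names[0], row["topic"]))
    return matches

matches = reidentify(anonymized, named)
print(matches)
```

No name ever appears in the “anonymized” data, yet both records end up tied to a person because the combination of remaining details is unique.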

Cases Where Data Sales Went Off the Rails

Real-world examples show the dangers. Take Meta’s AI tools—they’ve faced scrutiny for using user data in ways that enable psychological profiling for ads. In one case, conversations with AI companions led to ads that felt too invasive, sparking backlash.

Another instance involves chatbot breaches where personal data leaked, exposing users to identity theft. Companies like those behind romantic AI apps have been called out for selling intimate details, risking emotional harm.

On X, users describe AI tools that appear to turn their data into ads almost instantly, making the technology feel like a marketing machine. These stories highlight the need for better safeguards.

Where This Data Trend Might Lead Us

Looking ahead, AI companions could dominate companionship, with markets projected to boom. But data monetization will likely grow, including subscriptions tied to ad-free experiences or outright sales.

At first this might seem convenient, but the risks mount over time. Experts warn of emotional dependence, where data sales amplify manipulation. Devices like AI wearables could track even more, feeding endless ad cycles.

We, as a society, must push for ethical standards to balance innovation with privacy. Otherwise, the future could see AI shaping relationships in ways that prioritize profit over people.

Ways to Shield Your Details from the Sale

You aren’t powerless. Start by reading privacy policies—opt out where possible. Use privacy-focused AI alternatives that don’t sell data.

Here are practical steps:

- Limit sharing: Avoid sensitive topics in chats.

- Check settings: Turn off data collection features.

- Use VPNs: Mask your online activity.

- Support regulations: Advocate for stronger laws.
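For readers comfortable with a little scripting, the “limit sharing” step can be partly automated. The sketch below masks email addresses and phone numbers in a message before you paste it into a chat. It is an assumption-laden example: the `PATTERNS` table and `redact` function are hypothetical, and these simple patterns will miss many kinds of personal detail.

```python
import re

# Simple, illustrative patterns; real personal data takes many more forms.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}

def redact(text):
    """Replace each matched email address or phone number with a label."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text

sample = "Email me at jane.doe@example.com or call 555-123-4567 tomorrow."
print(redact(sample))
```

This only hides obvious identifiers. The topics you discuss can still reveal a lot, so it complements the steps above rather than replacing them.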

Be especially mindful of free services, since they often rely on data sales. By staying informed, you can navigate this landscape more safely.

In the end, when AI companions sell your personalized data, it reshapes not just ads but trust in technology. Advertisers’ gains come at your expense, but awareness and action can tip the scales back. The conversation around this is just starting, and staying vigilant helps keep your data yours.